Make sure you check the syllabus for the due date. Please use the notation adopted in class, even if the problem is stated in the book using a different notation.

SpamBase-Polluted dataset:

the same datapoints as in the original Spambase dataset, only with a lot more columns (features): either random values, or somewhat loose features, or duplicated original features.

SpamBase-Polluted with missing values dataset: train, test.

Same dataset, only some values (picked at random) have been deleted.
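The assignment text here does not prescribe how to handle the deleted values. As a minimal sketch of one common baseline (per-feature mean imputation, an assumption rather than the required method), the snippet below marks missing entries as NaN and fills them with column means. The function names `delete_at_random`, `column_means`, and `impute` are hypothetical.

```python
import math
import random

def delete_at_random(rows, frac, seed=0):
    """Return a copy of rows with roughly `frac` of the entries replaced by NaN."""
    rng = random.Random(seed)
    return [[math.nan if rng.random() < frac else v for v in row] for row in rows]

def column_means(rows):
    """Mean of each column, ignoring NaN entries (0.0 if a column is all NaN)."""
    means = []
    for col in zip(*rows):
        present = [v for v in col if not math.isnan(v)]
        means.append(sum(present) / len(present) if present else 0.0)
    return means

def impute(rows, means):
    """Replace NaN entries with the given per-column means."""
    return [[means[j] if math.isnan(v) else v for j, v in enumerate(row)]
            for row in rows]

train = [[1.0, 2.0], [3.0, math.nan], [math.nan, 6.0]]
means = column_means(train)   # computed on the training split only
filled = impute(train, means)
```

The means would be computed on the training split and reused for the test split, so that no test information leaks into training.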

Extract Haar features for each image in the Digits Dataset (Training data, labels. Testing data, labels).
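Haar-like features are differences of pixel sums over adjacent rectangles, and an integral image lets each rectangle sum be read off in constant time. The sketch below is one possible way to compute two such features for a single rectangle. The choice of features (top half minus bottom half, left half minus right half) and the function names are assumptions, not necessarily the variant used in class.

```python
def integral_image(img):
    """ii[r][c] holds the sum of img[0:r][0:c]; row 0 and column 0 are zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        row_sum = 0
        for c in range(w):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii

def rect_sum(ii, top, left, bottom, right):
    """Sum of pixels in rows [top, bottom) and columns [left, right)."""
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

def haar_features(ii, top, left, bottom, right):
    """Return (top half - bottom half, left half - right half) for one rectangle."""
    mid_r = (top + bottom) // 2
    mid_c = (left + right) // 2
    vertical = (rect_sum(ii, top, left, mid_r, right)
                - rect_sum(ii, mid_r, left, bottom, right))
    horizontal = (rect_sum(ii, top, left, bottom, mid_c)
                  - rect_sum(ii, top, mid_c, bottom, right))
    return vertical, horizontal

img = [[1, 2], [3, 4]]
ii = integral_image(img)
features = haar_features(ii, 0, 0, 2, 2)
```

A full feature vector would repeat `haar_features` over a fixed set of rectangles per image, with the integral image computed once per image.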

Train 10-class ECOC-Boosting on the extracted features and report performance.

(HINT: For parsing the MNIST dataset, please see Python code: mnist.py; MATLAB code: MNIST_Dataset)
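For the ECOC step, each class gets a binary codeword, one binary booster is trained per code bit, and a test point is assigned to the class whose codeword is closest to the predicted bits. The sketch below covers only the coding and decoding parts. It assumes a random +1/-1 code matrix and Hamming-distance decoding, and the function names are hypothetical. The boosters themselves are left out.

```python
import random

def random_code_matrix(n_classes, n_bits, seed=0):
    """Random +1/-1 code matrix with distinct rows and no constant column."""
    rng = random.Random(seed)
    while True:
        m = [[rng.choice((-1, 1)) for _ in range(n_bits)]
             for _ in range(n_classes)]
        rows_distinct = len({tuple(r) for r in m}) == n_classes
        cols_split = all(len({r[b] for r in m}) == 2 for b in range(n_bits))
        if rows_distinct and cols_split:
            return m

def relabel(labels, code, bit):
    """Binary +1/-1 targets for the booster trained on code column `bit`."""
    return [code[y][bit] for y in labels]

def decode(bit_predictions, code):
    """Class whose codeword has the smallest Hamming distance to the predictions."""
    def hamming(word):
        return sum(p != w for p, w in zip(bit_predictions, word))
    return min(range(len(code)), key=lambda k: hamming(code[k]))

code = random_code_matrix(n_classes=10, n_bits=20)
```

Longer codewords with larger pairwise Hamming distance tolerate more mistakes from individual boosters, at the cost of training more binary problems.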

A) Run Boosting (AdaBoost, RankBoost, or Gradient Boosting) on text documents from 20 Newsgroups without extracting features in advance. Extract features for each round of boosting based on the current boosting weights.

B) Run Boosting (AdaBoost, RankBoost, or Gradient Boosting) on image datapoints from the Digits Dataset without extracting features in advance. Extract features for each round of boosting based on the current boosting weights. You can follow this paper.
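One way to read "extract features for each round based on the current boosting weights" is sketched below for the text case, as an assumption and not necessarily the method of the referenced paper. Each round samples documents in proportion to their AdaBoost weights, takes the words of those documents as candidate features, and keeps the word-presence stump with the lowest weighted error. The names `adaboost_on_the_fly` and `predict` are hypothetical, and documents are represented as token sets with +1/-1 labels.

```python
import math
import random

def adaboost_on_the_fly(docs, labels, rounds=10, pool=20, seed=0):
    """AdaBoost over token sets; candidate words are redrawn every round.

    Returns a list of (alpha, word, sign) weak learners.
    """
    rng = random.Random(seed)
    n = len(docs)
    w = [1.0 / n] * n
    model = []
    for _ in range(rounds):
        # Candidate words come from documents sampled by current weight.
        heavy = rng.choices(range(n), weights=w, k=pool)
        candidates = sorted({t for i in heavy for t in docs[i]})
        best = None
        for word in candidates:
            # Weighted error of the stump "predict +1 if the word is present".
            err = sum(wi for wi, d, y in zip(w, docs, labels)
                      if (1 if word in d else -1) != y)
            sign = 1 if err <= 0.5 else -1   # flip the stump if it is worse than chance
            err = min(err, 1.0 - err)
            if best is None or err < best[0]:
                best = (err, word, sign)
        if best is None:
            break
        err, word, sign = best
        err = min(max(err, 1e-10), 1 - 1e-10)   # keep alpha finite
        alpha = 0.5 * math.log((1 - err) / err)
        model.append((alpha, word, sign))
        # Standard AdaBoost reweighting, then renormalize.
        w = [wi * math.exp(-alpha * y * sign * (1 if word in d else -1))
             for wi, d, y in zip(w, docs, labels)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model

def predict(model, doc):
    """Sign of the weighted vote of all stumps on one token set."""
    score = sum(a * s * (1 if word in doc else -1) for a, word, s in model)
    return 1 if score >= 0 else -1

docs = [{"ball", "game"}, {"game", "team"}, {"cpu", "disk"}, {"disk", "ram"}]
labels = [1, 1, -1, -1]
model = adaboost_on_the_fly(docs, labels, rounds=5)
```

For the image case in B), the same loop could draw random Haar rectangles each round instead of words, again scoring each candidate by its weighted error.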

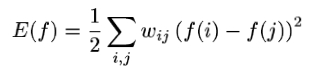

Prove the harmonic function property discussed in class, based on this paper. Specifically, prove that to minimize the energy function

f must be harmonic, i.e. for all unlabeled datapoints j, it must satisfy
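A numerical sanity check can build intuition before writing the proof. The sketch below assumes the usual graph-based setup: symmetric nonnegative weights $w_{ij}$, the quadratic energy $E(f) = \frac{1}{2}\sum_{i,j} w_{ij}\,(f(i) - f(j))^2$, and the harmonic condition in its averaging form $f(j) = \frac{1}{d_j}\sum_i w_{ij} f(i)$ with $d_j = \sum_i w_{ij}$. These forms, the toy chain graph, and the function names are assumptions for illustration, since the formulas are not reproduced in this text. It is a check on one example, not a substitute for the proof.

```python
def energy(w, f):
    """Quadratic energy 0.5 * sum_ij w[i][j] * (f[i] - f[j])**2."""
    n = len(f)
    return 0.5 * sum(w[i][j] * (f[i] - f[j]) ** 2
                     for i in range(n) for j in range(n))

def harmonic_solution(w, f, unlabeled, sweeps=500):
    """Repeatedly set each unlabeled value to the weighted average of its neighbors."""
    f = list(f)
    for _ in range(sweeps):
        for j in unlabeled:
            d_j = sum(w[j])
            f[j] = sum(w[j][i] * f[i] for i in range(len(f))) / d_j
    return f

# Chain graph 0 - 1 - 2 - 3 with unit weights; nodes 0 and 3 are labeled.
w = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]]
f0 = [0.0, 0.5, 0.5, 1.0]          # labeled ends fixed, arbitrary start inside
f = harmonic_solution(w, f0, unlabeled=[1, 2])

# Perturbing an unlabeled value away from the average should raise the energy.
perturbed = list(f)
perturbed[1] += 0.1
```

On this chain the averaging iteration settles on values that interpolate linearly between the two labeled ends, and any perturbation of an unlabeled value increases the energy.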