CS6140 Machine Learning

HW5 - Features

Make sure you check the syllabus for the due date. Please use the notations adopted in class, even if the problem is stated in the book using a different notation.

SpamBase-Polluted dataset: the same datapoints as in the original Spambase dataset, only with many more columns (features): either random values, somewhat loose features, or duplicated original features.

SpamBase-Polluted with missing values dataset: train, test.

The same dataset, except that some values (picked at random) have been deleted.

Digits Dataset (training data, labels; testing data, labels): about 60,000 images, each 28x28 pixels, representing digit scans. Each image is labeled with the digit it represents, one of 10 classes: 0, 1, 2, ..., 9.

PROBLEM 5: Implement Kernel PCA for linear regression (Optional, no credit)

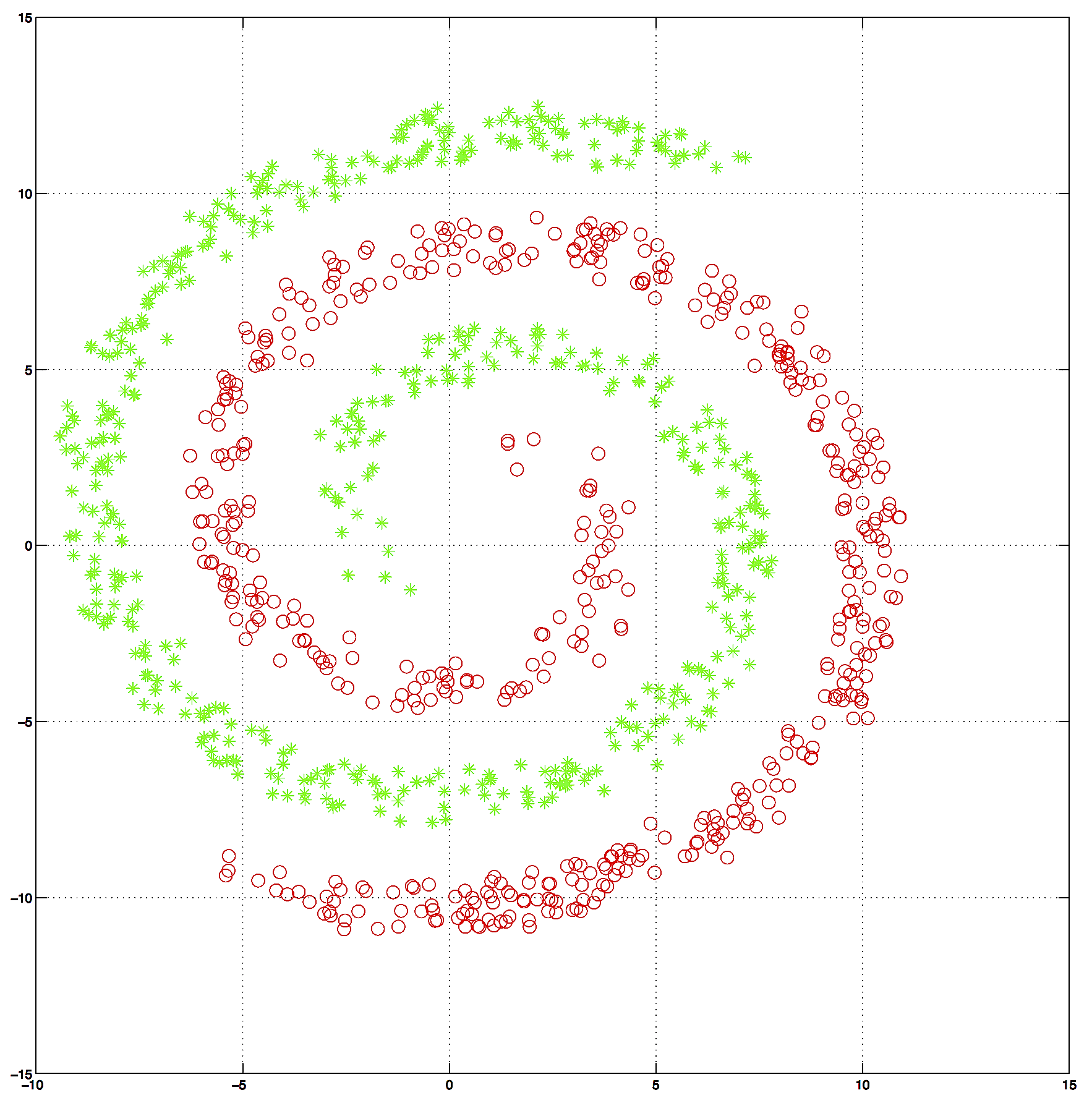

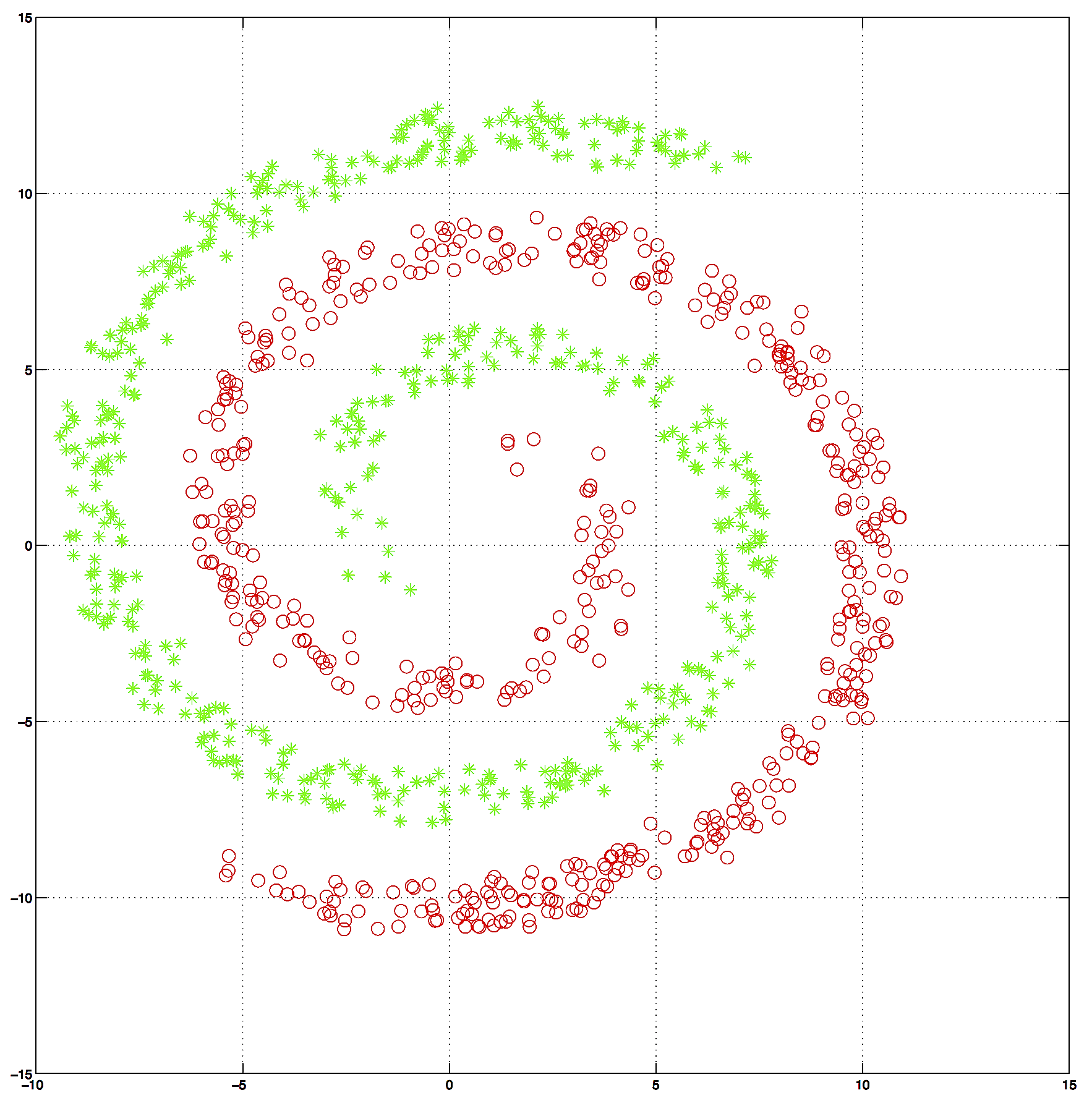

Dataset: TwoSpirals, 1000 two-dimensional datapoints

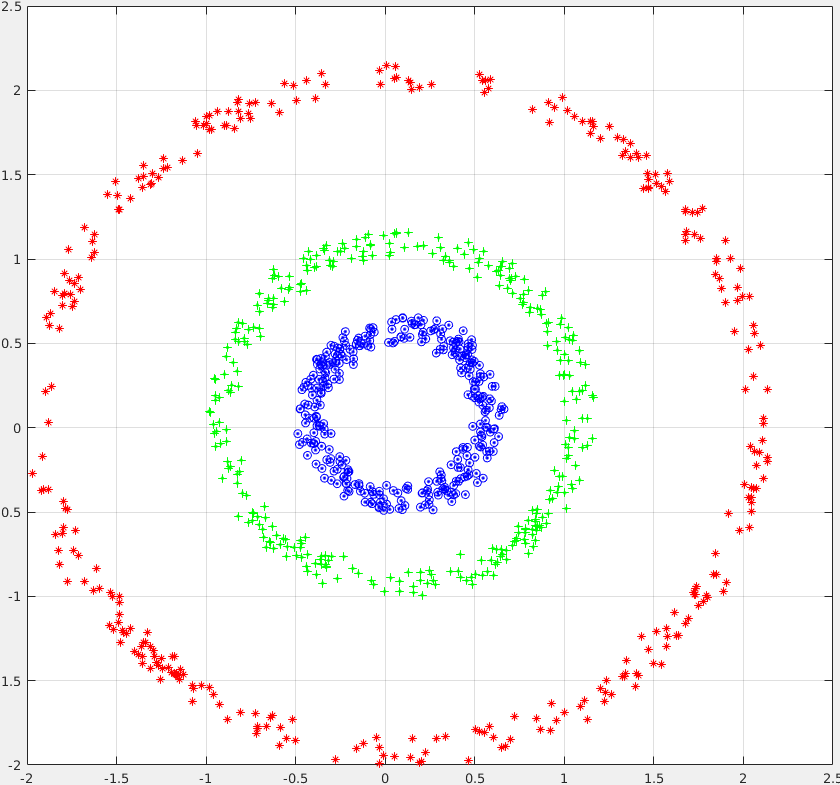

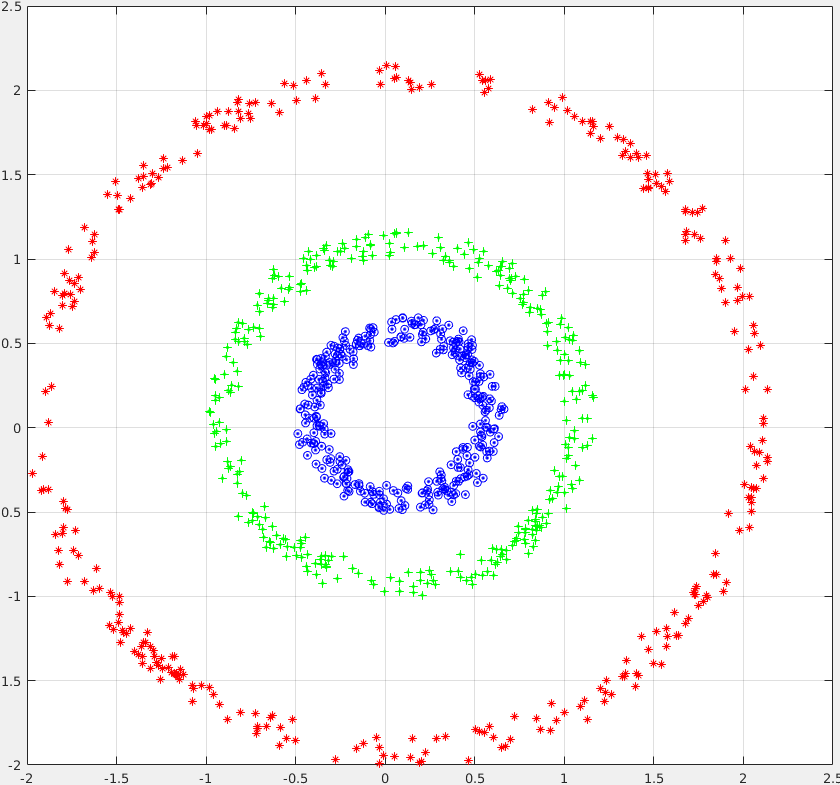

Dataset: ThreeCircles, 1000 two-dimensional datapoints

A) First, train a linear regression (a library implementation is fine) and confirm that it doesn't work, i.e., it has a high classification error or a high root mean squared error (RMSE).
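Part A can be checked with a few lines of NumPy. This is a minimal sketch under stated assumptions, not the required solution: `make_three_circles` is a hypothetical stand-in for the provided ThreeCircles file (three noisy rings, label = ring index), ordinary least squares via `numpy.linalg.lstsq` replaces a library regressor such as scikit-learn's `LinearRegression`, and the regression output is turned into a class by rounding to the nearest label (an assumed scoring rule).

```python
import numpy as np

def make_three_circles(n=1000, radii=(1.0, 2.0, 3.0), noise=0.1, seed=0):
    """Hypothetical stand-in for the ThreeCircles data: label = ring index (0, 1, 2)."""
    rng = np.random.default_rng(seed)
    labels = rng.integers(0, len(radii), size=n)
    angles = rng.uniform(0.0, 2.0 * np.pi, size=n)
    r = np.asarray(radii)[labels] + noise * rng.standard_normal(n)
    X = np.column_stack([r * np.cos(angles), r * np.sin(angles)])
    return X, labels.astype(float)

def fit_linear_regression(X, y):
    """Ordinary least squares with a bias column; returns the weight vector."""
    A = np.column_stack([np.ones(len(X)), X])
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    return w

def predict(w, X):
    return np.column_stack([np.ones(len(X)), X]) @ w

def evaluate(y_true, y_pred, n_classes):
    """Returns (RMSE, classification error)."""
    rmse = float(np.sqrt(np.mean((y_true - y_pred) ** 2)))
    # assumed rule: class = regression output rounded to the nearest valid label
    y_class = np.clip(np.rint(y_pred), 0, n_classes - 1)
    error = float(np.mean(y_class != y_true))
    return rmse, error

X, y = make_three_circles()
w = fit_linear_regression(X, y)
rmse, error = evaluate(y, predict(w, X), n_classes=3)
print(f"train RMSE = {rmse:.3f}, classification error = {error:.3f}")
```

Because the rings are centered at the origin, the label is essentially uncorrelated with the raw coordinates, so the fitted plane is nearly flat and predicts the middle label for almost every point; on this stand-in data the classification error comes out at roughly 2/3.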

B) Run Kernel PCA with a Gaussian kernel to obtain a representation with T features. For reference, these are the steps we demoed in class (Matlab):

```matlab
% X is the N x d data matrix, one datapoint per row
N = size(X, 1);

% pairwise squared Euclidean distances, N x N
X2 = dot(X, X, 2);
DIST_euclid = bsxfun(@plus, X2, X2') - 2 * (X * X');

% Gaussian kernel matrix, N x N (sigma controls the kernel width)
sigma = 3;
K = exp(-DIST_euclid / sigma);

% center the kernel so that it corresponds to zero-mean features
U = ones(N) / N;
Kn = K - U*K - K*U + U*K*U;
Kn = (Kn + Kn') / 2;   % remove round-off asymmetry so eig returns real values

% kernel eigenvalues and eigenvectors, sorted with the largest eigenvalue first
[V, D] = eig(Kn, 'vector');
[D, sorteig] = sort(D, 'descend');
V = V(:, sorteig);

% project the data onto the eigenvectors: column j of XG is component j
XG = Kn * V;

% keep the first 3, 20, and 100 dimensions
X3G = XG(:, 1:3);
X20G = XG(:, 1:20);
X100G = XG(:, 1:100);
```
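For readers not working in Matlab, the same steps can be sketched in Python with NumPy. This is a minimal sketch, assuming the same kernel parameterization exp(-||x_i - x_j||^2 / sigma) with sigma = 3; the function name `gaussian_kernel_pca` is hypothetical, `numpy.linalg.eigh` (the symmetric eigensolver) plays the role of Matlab's `eig`, and the random input is placeholder data, not TwoSpirals or ThreeCircles.

```python
import numpy as np

def gaussian_kernel_pca(X, sigma=3.0):
    """Hypothetical helper mirroring the Matlab steps.

    Returns (XG, eigvals): column j of XG is the projection onto the j-th
    eigenvector of the centered kernel, sorted by decreasing eigenvalue.
    """
    n = X.shape[0]
    sq = np.sum(X * X, axis=1)
    # pairwise squared Euclidean distances; clip tiny negative round-off
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    K = np.exp(-dist2 / sigma)
    # center the kernel: Kn = K - U K - K U + U K U with U = ones(n)/n
    U = np.full((n, n), 1.0 / n)
    Kn = K - U @ K - K @ U + U @ K @ U
    Kn = (Kn + Kn.T) / 2.0
    # eigh returns eigenvalues in ascending order; reorder largest first
    eigvals, V = np.linalg.eigh(Kn)
    order = np.argsort(eigvals)[::-1]
    eigvals, V = eigvals[order], V[:, order]
    return Kn @ V, eigvals

# placeholder data standing in for the provided 2-D datasets
X = np.random.default_rng(0).normal(size=(200, 2))
XG, eigvals = gaussian_kernel_pca(X)
X3G, X20G, X100G = XG[:, :3], XG[:, :20], XG[:, :100]
```

Since `Kn` is centered, every projected column has zero mean, and column j equals the j-th eigenvector scaled by its eigenvalue; that per-column scaling does not change the fit of unregularized linear regression.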

C) Retrain linear regression on the transformed T-dimensional data (the first T kernel PCA features). How large does T need to be to get good performance?
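One way to approach part C is to sweep T and record the error for each value. The sketch below is self-contained and rests on assumptions: the hypothetical `make_three_circles` generator (three noisy rings, label = ring index) stands in for the real file, sigma = 3, the class is the regression output rounded to the nearest label, and the helper names and candidate T values are illustrative. It centers the kernel with row and column means, which is algebraically the same as K - U K - K U + U K U.

```python
import numpy as np

def make_three_circles(n=1000, radii=(1.0, 2.0, 3.0), noise=0.1, seed=0):
    """Hypothetical stand-in for ThreeCircles: label = ring index (0, 1, 2)."""
    rng = np.random.default_rng(seed)
    labels = rng.integers(0, len(radii), size=n)
    angles = rng.uniform(0.0, 2.0 * np.pi, size=n)
    r = np.asarray(radii)[labels] + noise * rng.standard_normal(n)
    X = np.column_stack([r * np.cos(angles), r * np.sin(angles)])
    return X, labels.astype(float)

def kernel_pca_features(X, sigma=3.0):
    """Gaussian kernel PCA projections Kn @ V, columns sorted by decreasing eigenvalue."""
    sq = np.sum(X * X, axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    K = np.exp(-dist2 / sigma)
    # same as K - U K - K U + U K U with U = ones(n)/n, written with means
    Kn = K - K.mean(axis=0)[None, :] - K.mean(axis=1)[:, None] + K.mean()
    Kn = (Kn + Kn.T) / 2.0
    eigvals, V = np.linalg.eigh(Kn)
    order = np.argsort(eigvals)[::-1]
    return Kn @ V[:, order]

def fit_and_score(F, y, n_classes):
    """Least squares with a bias column on features F; returns (RMSE, classification error)."""
    A = np.column_stack([np.ones(len(F)), F])
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    pred = A @ w
    rmse = float(np.sqrt(np.mean((y - pred) ** 2)))
    # assumed rule: class = regression output rounded to the nearest valid label
    error = float(np.mean(np.clip(np.rint(pred), 0, n_classes - 1) != y))
    return rmse, error

X, y = make_three_circles()
XG = kernel_pca_features(X)
results = {}
for T in (1, 2, 3, 5, 10, 20, 50, 100):
    results[T] = fit_and_score(XG[:, :T], y, n_classes=3)
    print(f"T = {T:3d}: train RMSE = {results[T][0]:.3f}, train error = {results[T][1]:.3f}")
```

These are training-set scores. The features for a smaller T are a subset of those for a larger T, so the training RMSE can only go down as T grows; to judge how large T actually needs to be, compare errors on held-out points rather than on the training points.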