This is an open book, open notes exam. Please put all your answers in the blue answer book. You may keep your copy of this question sheet.

Question 1. Decision tree learning This is similar to the restaurant choice example in AIMA. The eleven examples in the table below deal with a decision as to whether or not to adopt a dog. The goal predicate, the decision to adopt, is binary, Yes or No. The five attributes and their possible values are:

Goal Example || Size | Age | Short | Quiet | HB | Adopt --------------------------------------------------- X1 || L | A | No | No | Yes | No --------------------------------------------------- X2 || S | J | Yes | No | Yes | Yes --------------------------------------------------- X3 || M | P | Yes | Yes | No | Yes --------------------------------------------------- X4 || L | A | No | No | Yes | No --------------------------------------------------- X5 || L | A | No | Yes | Yes | Yes --------------------------------------------------- X6 || M | P | No | No | Yes | Yes --------------------------------------------------- X7 || L | P | Yes | Yes | No | No --------------------------------------------------- X8 || L | J | Yes | Yes | Yes | Yes --------------------------------------------------- X9 || S | A | No | No | Yes | No --------------------------------------------------- X10 || M | J | No | Yes | Yes | Yes --------------------------------------------------- X11 || S | P | Yes | Yes | No | No ---------------------------------------------------

What you are to do:

First: Use Size as the only attribute to split the examples.

How well does this separate the examples?

Second: Omitting the data from X9, create a decision tree, using Size

to split at the root and Quiet as the next splitting attribute. Does this tree successfully

separate the answers (goal)? Next, test the tree on X9 and explain what

occurs.

Third: Build your own decision tree, choosing whatever attribute you want for

the root and choosing different attributes for the diffferent subtrees

in an effort to minimize the total depth of the tree.

Of course your search for the best solution

cannot be exhaustive (!) Simply do a bit of experimentation to come up

with something suboptimal, but reasonable. Discuss what you did and what you found.

Question 2. Backtracking search for constraint satisfaction. This problem is similar to the map-coloring problem of Chap. 5. Assume there is a long enclosure divided linearly into four sections, S1, S2, S3, and S4. The task is to assign three possible organisms to the sections in such a way that the following constraints are satisfied for the three organisms: Bunny, Wolfy, and Plant.

Draw and discuss two different backtracking trees and how they search for and discover possible assignments that satisfy the three constraints listed above.

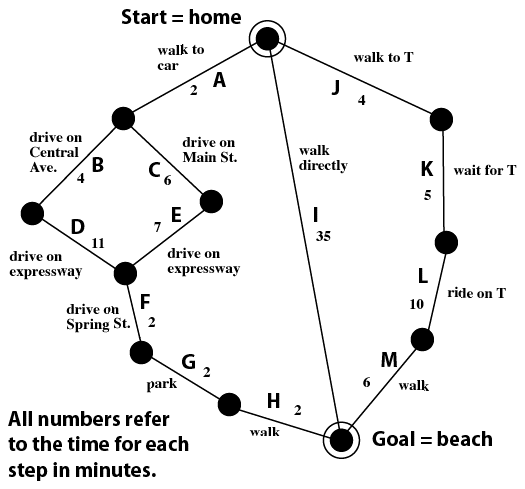

Question 3. Search strategies for exploring routes. The route map below shows a variety of routes from the Start State = Home, to the Goal State = the Beach. The number on each link is the time in minutes for each step. Your overall goal is to find the fastest route, the one with the smallest total time. The most important warning about these searches is that the search tree nodes correspond to the links in the map, not the nodes in the map. For your convenience, I have labeled each link with a single letter.

First: Draw a complete depth-first search tree showing the order you have chosen for expanding nodes. How many nodes have to be expanded until the shortest time solution is found? Is there any point(s) at which the search can be pruned based on what has been found so far? Is there some point, short of a full search, that the search can be terminated with certainty? Whether or not you can prune or terminate early discuss how a such opportunities might arise.

Second:Draw the trees used for iterative deepening depth-first search and discuss it in detail in the same way you did for depth-first search. What advantage did this search have for this problem? Whether or not it did have an advantage, discuss its advantages and disadvantages in general, compared to both depth-first and breadth-first search.

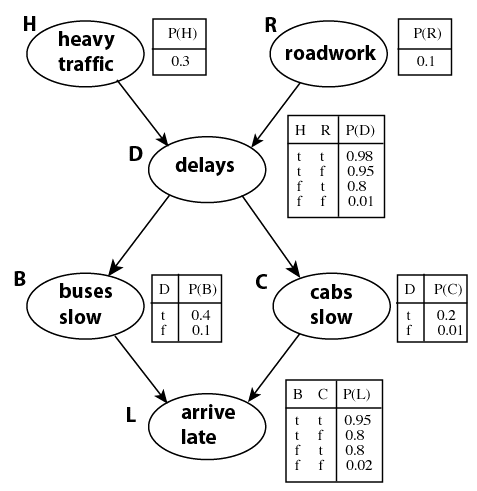

Question 4. Bayesian networks The diagram below shows a Bayesian network similar to FIg. 14.2 in AIMA.

Your task: Assume that the following values are known: H=t, R=f, and C=t. Evaluate p(L), given this knowledge. This will require you to sum over appropriate probabilities for the four cases for the remaining variables, D and B.

Question 5. Resolution and model checking in Propositional Logic. You are given the following assertions and their symbols:

Here is the argument and the statement to be proved (or disproved):

First part: Convert the statements to clausal form and the entire set of statements to conjunctive normal form. Add ¬C and use resolution in an attempt to prove C. Note that if an exhaustive seach using all resolvents does not lead to a contradiction, then C does not follow from the premises.

Second part: Represent the the conjunction of the three argument statements or their equivalenet clauses by Φ. If the conclusion C is not in fact valid, then there should be at least one model in which Φ∧¬C is true. The table below is the beginning of model checking for this statement. Fill in the remaining six cases, always assuming that C is false (the only relevant value). You should find four cases for which Φ∧¬C is true. Discuss how your results here relate to proof in general and to your resolution-based proof in particular.

J | Y | S | C | Φ∧¬C | ----------------------- 0 | 0 | 0 | 0 | 0 | ----------------------- 0 | 0 | 1 | 0 | 0 | ----------------------- Etc. -----------------------

Question 6. EXTRA CREDIT Resolution problems in FOL. You have two tasks. The first is to convert the statements below to CNF. The next step is to negate the desired conclusion, Marcus hates Caesar and use resolution to reach a contradiction, if you can.

Go to CSG120 home page. or RPF's Teaching Gateway or homepage