J.P. Lewis, Ruth Rosenholtz, Nickson Fong, and Ulrich Neumann

Although existing GUIs have a sense of space, they provide no sense of place. Our paper presents a fundamentally graphics-based approach to this ‘lost in hyperspace’ problem. Specifically, we propose that spatial display of files is not sufficient to engage our visual skills; scenery (distinctive visual appearance) is needed as well. While scenery (in the form of custom icon assignments) is already possible in current operating systems, few if any users take the time to manually assign icons to all their files. As such, our proposal is to generate visually distinctive icons (“VisualIDs”) automatically, while allowing the user to replace the icon if desired. The paper discusses psychological and conceptual issues relating to icons, visual memory, and the necessary relation of scenery to data. A particular icon generation algorithm is described; subjects using these icons in simulated file search and recall tasks show significantly improved performance with little effort.

Doodle Icons

Junhwan Kim and Fabio Pellacini

This paper introduces a new kind of mosaic, called Jigsaw Image Mosaic (JIM), where image tiles of arbitrary shape are used to compose the final picture. The generation of a Jigsaw Image Mosaic is a solution to the following problem: given an arbitrarily-shaped container image and a set of arbitrarily-shaped image tiles, fill the container as compactly as possible with tiles of similar color to the container taken from the input set while optionally deforming them slightly to achieve a more visually pleasing effect. We approach the problem by defining a mosaic as the tile configuration that minimizes a mosaicing energy function. We introduce a general energy-based framework for mosaicing problems that extends some of the existing algorithms such as Photomosaics and Simulated Decorative Mosaics. We also present a fast algorithm to solve the mosaicing problem at an acceptable computational cost. We demonstrate the use of our method by applying it to a wide range of container images and tiles.

Jigsaw Image Mosaics

Stephen Brooks and Neil Dodgson

We present a simple method of interactive texture editing that utilizes self-similarity to replicate intended operations globally over an image. Inspired by the recent successes of hierarchical approaches to texture synthesis, this method also uses multi-scale neighborhoods to assess the similarity of pixels within a texture. However, neighborhood matching is not employed to generate new instances of a texture. We instead locate similar neighborhoods for the purpose of replicating editing operations on the original texture itself, thereby creating a fundamentally new texture. This general approach is applied to texture painting, cloning and warping. These global operations are performed interactively, most often directed with just a single mouse movement.

Similarity Based Warping

Kevin G Suffern

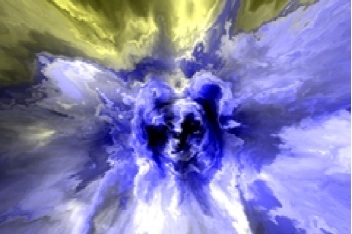

This sketch presents fractal artwork created by ray tracing the specular highlights of point lights on the inside surface of a hollow sphere. The sphere has a mirror surface on the inside that contributes no colour to the images, but there is spread in the local specular reflection. The resulting images consist entirely of specular highlights and their reflections. I call this painting with light because, although recursive ray tracing is used, no objects are visible.

Use of depth lights, recursion depth = 12

Ryan M. Geiss

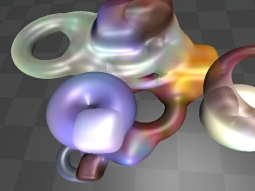

This article reviews what a metaball is and how to render one, and describes a new optimization I've made for rendering metaballs. For complex scenes, it can render hundreds of times faster, though I'm certain others have discovered this trick as well. It does very slightly change the characteristic shape of the blobs, but there is no loss in image quality whatsoever.

Metaballs
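As a rough illustration of what a metaball field is, the sketch below sums an inverse-square contribution from each ball and treats a point as inside the blob when the sum reaches a threshold. The falloff function, the `(cx, cy, radius)` tuple layout, and the function names are assumptions made for this example; it does not include the rendering optimization mentioned in the abstract.

```python
def field(x, y, balls):
    """Sum of inverse-square contributions from each ball (cx, cy, radius)."""
    total = 0.0
    for cx, cy, radius in balls:
        d2 = (x - cx) ** 2 + (y - cy) ** 2
        if d2 == 0.0:
            return float("inf")  # exactly at a ball centre
        total += (radius * radius) / d2
    return total


def inside(x, y, balls, threshold=1.0):
    """A point belongs to the blob when the summed field reaches the threshold."""
    return field(x, y, balls) >= threshold


def render_ascii(balls, width=40, height=20, threshold=1.0):
    """Brute-force rendering: evaluate the field once per character cell."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            row += "#" if inside(i + 0.5, j + 0.5, balls, threshold) else "."
        rows.append(row)
    return rows
```

Two balls that individually do not reach a point can still cover it together, which is what produces the characteristic blending between blobs.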

Alejo Hausner

This paper presents a method for simulating decorative tile mosaics. Such mosaics are challenging because the square tiles that comprise them must be packed tightly and yet must follow orientations chosen by the artist. Based on an existing image and user-selected edge features, the method can both reproduce the image's colours and emphasize the selected edges by placing tiles that follow the edges. The method uses centroidal Voronoi diagrams, which normally arrange points in regular hexagonal grids. By measuring distances with a Manhattan metric whose main axis is adjusted locally to follow the chosen direction field, the centroidal diagram can be adapted to place tiles in curving square grids instead. Computing the centroidal Voronoi diagram is made possible by leveraging the z-buffer algorithm available in many graphics cards.

Mosaic stained glass, with dark leading lines emphasized
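The idea of a centroidal Voronoi diagram under a Manhattan metric can be sketched with one step of Lloyd relaxation on a pixel grid: each pixel is assigned to its nearest site, then each site moves to the centroid of its pixels. This is a simplified CPU sketch with a fixed, axis-aligned metric; the locally rotated metric and the z-buffer acceleration described in the abstract are not modelled, and the function name is hypothetical.

```python
def lloyd_step(sites, width, height):
    """One Lloyd relaxation step using Manhattan (L1) distance.

    sites is a list of (x, y) positions; pixel centres are sampled at
    (px + 0.5, py + 0.5). Returns the new list of site positions.
    """
    sums = [[0.0, 0.0, 0] for _ in sites]
    for py in range(height):
        for px in range(width):
            x, y = px + 0.5, py + 0.5
            # Nearest site under the Manhattan metric.
            best = min(
                range(len(sites)),
                key=lambda k: abs(x - sites[k][0]) + abs(y - sites[k][1]),
            )
            sums[best][0] += x
            sums[best][1] += y
            sums[best][2] += 1
    new_sites = []
    for (sx, sy), (ax, ay, n) in zip(sites, sums):
        # A site that owns no pixels stays where it is.
        new_sites.append((ax / n, ay / n) if n else (sx, sy))
    return new_sites
```

Repeating the step until the sites stop moving gives an approximately centroidal arrangement; a configuration that is already centroidal is left unchanged.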

Henrik Wann Jensen, Fredo Durand, Michael M. Stark, Simon Premoze, Julie Dorsey, and Peter Shirley

This paper presents a physically-based model of the night sky for realistic image synthesis. We model both the direct appearance of the night sky and the illumination coming from the Moon, the stars, the zodiacal light, and the atmosphere. To accurately predict the appearance of night scenes we use physically-based astronomical data, both for position and radiometry. The Moon is simulated as a geometric model illuminated by the Sun, using recently measured elevation and albedo maps, as well as a specialized BRDF. For visible stars, we include the position, magnitude, and temperature of the star, while for the Milky Way and other nebulae we use a processed photograph. Zodiacal light due to scattering in the dust covering the solar system, galactic light, and airglow due to light emission of the atmosphere are simulated from measured data. We couple these components with an accurate simulation of the atmosphere. To demonstrate our model, we show a variety of night scenes rendered with a Monte Carlo ray tracer.

Moon rising above a mountain ridge. The only visible feature in this dark night scene is the scattering of light in the thin cloud layer.

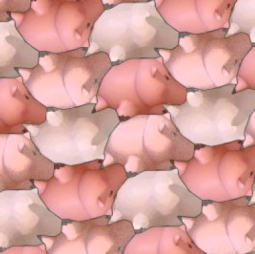

Craig S. Kaplan and David H. Salesin

This paper introduces and presents a solution to the Escherization problem: given a closed figure in the plane, find a new closed figure that is similar to the original and tiles the plane. Our solution works by using a simulated annealer to optimize over a parameterization of the isohedral tilings, a class of tilings that is flexible enough to encompass nearly all of Escher's own tilings, and yet simple enough to be encoded and explored by a computer. We also describe a representation for isohedral tilings that allows for highly interactive viewing and rendering. We demonstrate the use of these tools, along with several additional techniques for adding decorations to tilings, with a variety of original ornamental designs.

Escherized pigs

Li-Yi Wei and Marc Levoy

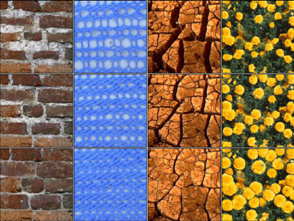

Texture synthesis is important for many applications in computer graphics, vision, and image processing. However, it remains difficult to design an algorithm that is both efficient and capable of generating high quality results. In this paper, we present an efficient algorithm for realistic texture synthesis. The algorithm is easy to use and requires only a sample texture as input. It generates textures with perceived quality equal to or better than those produced by previous techniques, but runs two orders of magnitude faster. This permits us to apply texture synthesis to problems where it has traditionally been considered impractical. In particular, we have applied it to constrained synthesis for image editing and temporal texture generation. Our algorithm is derived from Markov Random Field texture models and generates textures through a deterministic searching process. We accelerate this synthesis process using tree-structured vector quantization.

Texture synthesized from a small sample and random noise

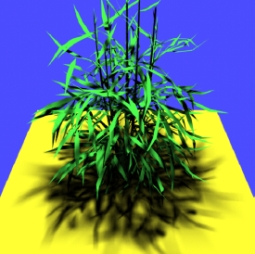

Maneesh Agrawala, Ravi Ramamoorthi, Alan Heirich, and Laurent Moll

We present two efficient image-based approaches for computation and display of high-quality soft shadows from area light sources. Our methods are related to shadow maps and provide the associated benefits. The computation time and memory requirements for adding soft shadows to an image depend on image size and the number of lights, not geometric scene complexity. We also show that because area light sources are localized in space, soft shadow computations are particularly well suited to image-based rendering techniques. Our first approach, layered attenuation maps, achieves interactive rendering rates, but limits sampling flexibility, while our second method, coherence-based raytracing of depth images, is not interactive, but removes the limitations on sampling and yields high quality images at a fraction of the cost of conventional raytracers. Combining the two algorithms allows for rapid previewing followed by efficient high-quality rendering.

Plant rendered using efficient high-quality coherence-based raytracing approach

Manuel M. Oliveira, Gary Bishop, and David McAllister

We present an extension to texture mapping that supports the representation of 3-D surface details and view motion parallax. The results are correct for viewpoints that are static or moving, far away or nearby. Our approach is very simple: a relief texture (texture extended with an orthogonal displacement per texel) is mapped onto a polygon using a two-step process: First, it is converted into an ordinary texture using a surprisingly simple 1-D forward transform. The resulting texture is then mapped onto the polygon using standard texture mapping. The 1-D warping functions work in texture coordinates to handle the parallax and visibility changes that result from the 3-D shape of the displacement surface. The subsequent texture-mapping operation handles the transformation from texture to screen coordinates.

Town rendered using relief texture mapping. Notice the bricks standing out and the protruding dormers.

Yoshinori Dobashi, Kazufumi Kaneda, Hideo Yamashita, Tsuyoshi Okita, and Tomoyuki Nishita

This paper proposes a simple and computationally inexpensive method for animation of clouds. The cloud evolution is simulated using a cellular automaton that simplifies the dynamics of cloud formation. The dynamics are expressed by several simple transition rules and their complex motion can be simulated with a small amount of computation. Realistic images are then created using one of the standard graphics APIs, OpenGL. This makes it possible to utilize graphics hardware, resulting in fast image generation. The proposed method can realize the realistic motion of clouds, shadows cast on the ground, and shafts of light through clouds.

Cloud formation around mountains
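To give a feel for how a few boolean transition rules can drive cloud growth, here is a minimal two-dimensional sketch. The three state variables (humidity, activation, cloud), the specific rules, and the four-cell neighbourhood are assumptions chosen for illustration; the abstract does not spell out its rules, and the paper's grid and neighbourhood may differ.

```python
def step(hum, act, cld):
    """Advance a boolean cloud automaton by one time step.

    hum, act and cld are equally sized 2-D lists of booleans:
    humidity available, phase transition active, cloud present.
    """
    h, w = len(hum), len(hum[0])
    new_hum = [[False] * w for _ in range(h)]
    new_act = [[False] * w for _ in range(h)]
    new_cld = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            neighbour_active = any(
                0 <= y + dy < h and 0 <= x + dx < w and act[y + dy][x + dx]
                for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1))
            )
            # Humidity is consumed where activation happened.
            new_hum[y][x] = hum[y][x] and not act[y][x]
            # Cloud appears where activation happened and then persists.
            new_cld[y][x] = cld[y][x] or act[y][x]
            # Activation spreads to humid, inactive cells next to active ones.
            new_act[y][x] = (not act[y][x]) and hum[y][x] and neighbour_active
    return new_hum, new_act, new_cld
```

Starting from a humid grid with a single active seed cell, the cloud region grows outward by one cell per step until the humidity is used up.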

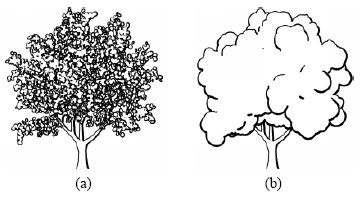

Oliver Deussen and Thomas Strothotte

We present a method for automatically rendering pen-and-ink illustrations of trees. A given 3-D tree model is illustrated by the tree skeleton and a visual representation of the foliage using abstract drawing primitives. Depth discontinuities are used to determine what parts of the primitives are to be drawn; a hybrid pixel-based and analytical algorithm allows us to deal efficiently with the complex geometric data. Using the proposed method we are able to generate illustrations with different drawing styles and levels of abstraction. The illustrations generated are spatially coherent, enabling us to create animations of sketched environments. Applications of our results are found in architecture, animation and landscaping.

Tree rendered with varying disk size and depth difference threshold: a) size=0.15, threshold=1000; b) size=0.7, threshold=2000.

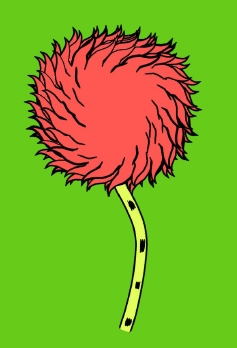

Michael A. Kowalski, Lee Markosian, J.D. Northrup, Lubomir Bourdev, Ronen Barzel, Loring S. Holden, and John F. Hughes

Artists and illustrators can evoke the complexity of fur or vegetation with relatively few well-placed strokes. We present an algorithm that uses strokes to render 3D computer graphics scenes in a stylized manner suggesting the complexity of the scene without representing it explicitly. The basic algorithm is customizable to produce a range of effects including fur, grass and trees, as we demonstrate in this paper and accompanying video. The algorithm is implemented within a broader framework that supports procedural stroke-based textures on polyhedral models. It renders moderately complex scenes at multiple frames per second on current graphics workstations, and provides some interframe coherence.

Truffula Treetop with Static Graftals Organized in a Three-level Hierarchy

Victor Ostromoukhov

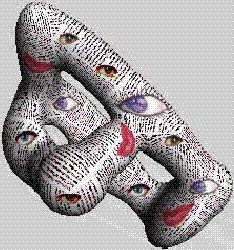

This contribution introduces the basic techniques for digital facial engraving, which imitates traditional copperplate engraving. Inspired by traditional techniques, we first establish a set of basic rules thanks to which separate engraving layers are built on the top of the original photo. Separate layers are merged according to simple merging rules and according to range shift/scale masks specially introduced for this purpose. We illustrate the introduced technique by a set of black/white and color engravings, showing different features such as engraving-specific image enhancements, mixing different regular engraving lines with mezzotint, irregular perturbations of engraving lines etc. We introduce the notion of engraving style which comprises a set of separate engraving layers together with a set of associated range shift/scale masks. The engraving style helps to port the look and feel of one engraving to another. Once different libraries of pre-defined mappable engraving styles and an appropriate user interface are added to the basic system, producing a decent gravure starting from a simple digital photo will be a matter of seconds. The engraving technique described in this contribution opens new perspectives for digital art, adding unprecedented power and precision to the engraver's work.

Face Engraving

Oliver Deussen, Pat Hanrahan, Bernd Lintermann, Radomir Mech, Matt Pharr, and Przemyslaw Prusinkiewicz

pages 275-286

Modeling and rendering of natural scenes with thousands of plants poses a number of problems. The terrain must be modeled and plants must be distributed throughout it in a realistic manner, reflecting the interactions of plants with each other and with their environment. Geometric models of individual plants, consistent with their positions within the ecosystem, must be synthesized to populate the scene. The scene, which may consist of billions of primitives, must be rendered efficiently while incorporating the subtleties of lighting in a natural environment. We have developed a system built around a pipeline of tools that address these tasks. The terrain is designed using an interactive graphical editor. Plant distribution is determined by hand (as one would do when designing a garden), by ecosystem simulation, or by a combination of both techniques. Given parametrized procedural models of individual plants, the geometric complexity of the scene is reduced by approximate instancing, in which similar plants, groups of plants, or plant organs are replaced by instances of representative objects before the scene is rendered. The paper includes examples of visually rich scenes synthesized using the system.

A Lychnis coronaria field after 99 and 164 simulation steps

Henrik Wann Jensen and Per H. Christensen

pages 423-434

This paper presents a new method for computing global illumination in scenes with participating media. The method is based on bidirectional Monte Carlo ray tracing and uses photon maps to increase efficiency and reduce noise. We remove previous restrictions limiting the photon map method to surfaces by introducing a volume photon map containing photons in participating media. We also derive a new radiance estimate for photons in the volume photon map. The method is fast and simple, but also general enough to handle nonhomogeneous media and anisotropic scattering. It can efficiently simulate effects such as multiple volume scattering, color bleeding between volumes and surfaces, and volume caustics (light reflected from or transmitted through specular surfaces and then scattered by a medium). The photon map is decoupled from the geometric representation of the scene, making the method capable of simulating global illumination in scenes containing complex objects. These objects do not need to be tessellated; they can be instanced, or even represented by an implicit function. Since the method is based on a bidirectional simulation, it automatically adapts to illumination and view. Furthermore, because the use of photon maps reduces noise and aliasing, the method is suitable for rendering of animations.

Dusty room illuminated by sunlight through a stained glass window.

Bruno Levy and Jean-Laurent Mallet

pages 343-352

This article introduces new techniques for non-distorted texture mapping on complex triangulated meshes. Texture coordinates are assigned to the vertices of the triangulation by using an iterative optimization algorithm, honoring a set of constraints minimizing the distortions. As compared to other global optimization techniques, our method allows the user to specify the surface zones where distortions should be minimized in order of preference. The modular approach described in this paper results in a highly flexible method, facilitating a customized mapping construction. For instance, it is easy to align the texture on the surface with a set of user-defined isoparametric curves. Moreover, the mapping can be made continuous through cuts, allowing complex cut surfaces to be parametrized in one go. It is easy to specify other constraints to be honored by the so-constructed mappings, as long as they can be expressed by linear (or linearizable) relations. This method has been integrated successfully within a widely used C.A.D. software dedicated to geosciences. In this context, applications of the method comprise numerical computations of physical properties stored in fine grids within texture space, unfolding geological layers and generating grids that are suitable for finite element analysis. The impact of the method could also be important for 3D paint systems.

Texture mapping on a face.

Michael T. Wong, Douglas E. Zongker, and David H. Salesin

pages 423-434

This paper describes some of the principles of traditional floral ornamental design, and explores ways in which these designs can be created algorithmically. It introduces the idea of "adaptive clip art," which encapsulates the rules for creating a specific ornamental pattern. Adaptive clip art can be used to generate patterns that are tailored to fit a particularly shaped region of the plane. If the region is resized or re-shaped, the ornament can be automatically re-generated to fill this new area in an appropriate way. Our ornamental patterns are created in two steps: first, the geometry of the pattern is generated as a set of two-dimensional curves and filled boundaries; second, this geometry is rendered in any number of styles. We demonstrate our approach with a variety of floral ornamental designs.

From the border on the first page of this article.

Wagner Toledo Correa, Robert J. Jensen, Craig E. Thayer, and Adam Finkelstein

pages 435-446

We present a method for applying complex textures to hand-drawn characters in cel animation. The method correlates features in a simple, textured, 3-D model with features on a hand-drawn figure, and then distorts the model to conform to the hand-drawn artwork. The process uses two new algorithms: a silhouette detection scheme and a depth-preserving warp. The silhouette detection algorithm is simple and efficient, and it produces continuous, smooth, visible contours on a 3-D model. The warp distorts the model in only two dimensions to match the artwork from a given camera perspective, yet preserves 3-D effects such as self-occlusion and foreshortening. The entire process allows animators to combine complex textures with hand-drawn artwork, leveraging the strengths of 3-D computer graphics while retaining the expressiveness of traditional hand-drawn cel animation.

A frame of cel animation with textured foreground character.

Amy Gooch, Bruce Gooch, Peter Shirley, and Elaine Cohen

pages 447-452

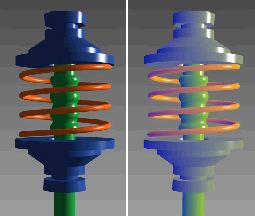

Phong-shaded 3D imagery does not provide geometric information of the same richness as human-drawn technical illustrations. A non-photorealistic lighting model is presented that attempts to narrow this gap. The model is based on practice in traditional technical illustration, where the lighting model uses both luminance and changes in hue to indicate surface orientation, reserving extreme lights and darks for edge lines and highlights. The lighting model allows shading to occur only in mid-tones so that edge lines and highlights remain visually prominent. In addition, we show how this lighting model is modified when portraying models of metal objects. These illustration methods give a clearer picture of shape, structure, and material composition than traditional computer graphics methods.

a) Phong model for colored object. b) New shading model with highlights and cool-to-warm hue shift.
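A cool-to-warm hue shift of the kind shown in panel b) can be sketched as a blend between a bluish and a yellowish tone, driven by the angle between the surface normal and the light direction. The blend formula, the parameter names, the default values, and the choice that light-facing surfaces receive the warm tone are assumptions for this example rather than details given in the abstract.

```python
import math


def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cool_to_warm(normal, to_light, surface_rgb,
                 blue=0.4, yellow=0.4, alpha=0.2, beta=0.6):
    """Blend a cool and a warm tone by surface orientation.

    normal and to_light are 3-vectors (any non-zero length); surface_rgb
    is the object colour with components in [0, 1].
    """
    n = _normalize(normal)
    l = _normalize(to_light)
    # t is 1 facing the light, 0 facing away, 0.5 at grazing angles.
    t = (1.0 + sum(a * b for a, b in zip(n, l))) / 2.0
    r, g, b = surface_rgb
    k_cool = (alpha * r, alpha * g, blue + alpha * b)
    k_warm = (yellow + beta * r, yellow + beta * g, beta * b)
    # Clamp so the result stays a valid colour.
    return tuple(min(1.0, t * w + (1.0 - t) * c)
                 for w, c in zip(k_warm, k_cool))
```

Because the blend never reaches pure black or pure white for typical parameters, those extremes stay available for edge lines and highlights.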

Aaron Hertzmann

pages 453-460

We present a new method for creating an image with a hand-painted appearance from a photograph, and a new approach to designing styles of illustration. We “paint” an image with a series of spline brush strokes. Brush strokes are chosen to match colors in a source image. A painting is built up in a series of layers, starting with a rough sketch drawn with a large brush. The sketch is painted over with progressively smaller brushes, but only in areas where the sketch differs from the blurred source image. Thus, visual emphasis in the painting corresponds roughly to the spatial energy present in the source image. We demonstrate a technique for painting with long, curved brush strokes, aligned to normals of image gradients. Thus we begin to explore the expressive quality of complex brush strokes. Rather than process images with a single manner of painting, we present a framework for describing a wide range of visual styles. A style is described as an intuitive set of parameters to the painting algorithm that a designer can adjust to vary the style of painting. We show examples of images rendered with different styles, and discuss long-term goals for expressive rendering styles as a general-purpose design tool for artists and animators.

Automatic painting with brush strokes

Tony DeRose, Michael Kass, and Tien Truong

pages 85-94

The creation of believable and endearing characters in computer graphics presents a number of technical challenges, including the modeling, animation and rendering of complex shapes such as heads, hands, and clothing. Traditionally, these shapes have been modeled with NURBS surfaces despite the severe topological restrictions that NURBS impose. In order to move beyond these restrictions, we have recently introduced subdivision surfaces into our production environment. Subdivision surfaces are not new, but their use in high-end CG production has been limited. Here we describe a series of developments that were required in order for subdivision surfaces to meet the demands of high-end production. First, we devised a practical technique for constructing provably smooth variable-radius fillets and blends. Second, we developed methods for using subdivision surfaces in clothing simulation including a new algorithm for efficient collision detection. Third, we developed a method for constructing smooth scalar fields on subdivision surfaces, thereby enabling the use of a wider class of programmable shaders. These developments, which were used extensively in our recently completed short film Geri’s Game, have become a highly valued feature of our production environment.

Geri

Jeremy S. De Bonet

pages 361-368

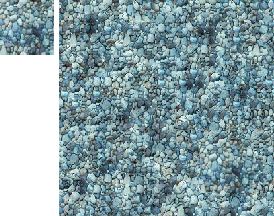

This paper outlines a technique for treating input texture images as probability density estimators from which new textures, with similar appearance and structural properties, can be sampled. In a two-phase process, the input texture is first analyzed by measuring the joint occurrence of texture discrimination features at multiple resolutions. In the second phase, a new texture is synthesized by sampling successive spatial frequency bands from the input texture, conditioned on the similar joint occurrence of features at lower spatial frequencies. Textures synthesized with this method more successfully capture the characteristics of input textures than do previous techniques.

The large patch is different from the original (left) yet appears as though it could have been generated by the same underlying stochastic process.

Cassidy J. Curtis, Sean E. Anderson, Joshua E. Seims, Kurt W. Fleischer, and David H. Salesin

pages 421-430

This paper describes the various artistic effects of watercolor and shows how they can be simulated automatically. Our watercolor model is based on an ordered set of translucent glazes, which are created independently using a shallow-water fluid simulation. We use a Kubelka-Munk compositing model for simulating the optical effect of the superimposed glazes. We demonstrate how computer-generated watercolor can be used in three different applications: as part of an interactive watercolor paint system, as a method for automatic image "watercolorization," and as a mechanism for non-photorealistic rendering of three-dimensional scenes.

Simulated watercolor effects.

Steven M. Seitz and Charles R. Dyer

pages 21-30

Image morphing techniques can generate compelling 2D transitions between images. However, differences in object pose or viewpoint often cause unnatural distortions in image morphs that are difficult to correct manually. Using basic principles of projective geometry, this paper introduces a simple extension to image morphing that correctly handles 3D projective camera and scene transformations. The technique, called view morphing, works by prewarping two images prior to computing a morph and then postwarping the interpolated images. Because no knowledge of 3D shape is required, the technique may be applied to photographs and drawings, as well as rendered scenes. The ability to synthesize changes both in viewpoint and image structure affords a wide variety of interesting 3D effects via simple image transformations.

View morphing between two images of an object taken from two different viewpoints produces the illusion of physically moving a virtual camera.

Kurt W. Fleischer, David H. Laidlaw, Bena L. Currin, and Alan H. Barr

pages 239-248

We propose an approach for modeling surface details such as scales, feathers, or thorns. These types of cellular textures require a representation with more detail than texture-mapping but are inconvenient to model with hand-crafted geometry. We generate patterns of geometric elements using a biologically motivated cellular development simulation together with a constraint to keep the cells on a surface. The surface may be defined by an implicit function, a volume dataset, or a polygonal mesh. Our simulation combines and extends previous work in developmental models and constrained particle systems.

Cellular textures can handle unusual topologies.

Jason Weber and Joseph Penn

pages 119-128

Recent advances in computer graphics have produced images approaching the elusive goal of photorealism. Since many natural objects are so complex and detailed, they are often not rendered with convincing fidelity due to the difficulties in succinctly defining and efficiently rendering their geometry. With the increased demand of future simulation and virtual reality applications, the production of realistic natural-looking background objects will become increasingly important. We present a model to create and render trees. Our emphasis is on the overall geometrical structure of the tree and not a strict adherence to botanical principles. Since the model must be utilized by general users, it does not require any knowledge beyond the principles of basic geometry. We also explain a method to seamlessly degrade the tree geometry at long ranges to optimize the drawing of large quantities of trees in forested areas.

Computer generated black tupelo tree.

Detlev Stalling and Hans-Christian Hege

pages 249-256

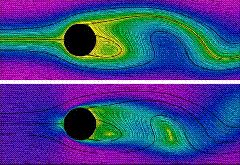

Line Integral Convolution (LIC) is a powerful technique for generating striking images and animations from vector data. Introduced in 1993, the method has rapidly found many application areas, ranging from computer arts to scientific visualization. Based upon locally filtering an input texture along a curved stream line segment in a vector field, it is able to depict directional information at high spatial resolutions. We present a new method for computing LIC images. It employs simple box filter kernels only and minimizes the total number of stream lines to be computed. Thereby it reduces computational costs by an order of magnitude compared to the original algorithm. Our method utilizes fast, error-controlled numerical integrators. Decoupling the characteristic lengths in vector field grid, input texture and output image, it allows computation of filtered images at arbitrary resolution. This feature is of significance in computer animation as well as in scientific visualization, where it can be used to explore vector data by smoothly enlarging structure of details. We also present methods for improved texture animation, again employing box filter kernels only. To obtain an optimal motion effect, spatial decay of correlation between intensities of distant pixels in the output image has to be controlled. This is achieved by blending different phase-shifted box filter animations and by adaptively rescaling the contrast of the output frames.

Flow around a cylinder. Color depicts stream function (upper) and speed (lower).
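The basic operation that LIC performs, averaging an input texture along a short stream line through each pixel with a box filter, can be sketched as below. This is a naive per-pixel version with fixed-step Euler integration and nearest-cell sampling, all of which are simplifying assumptions; it recomputes a stream line for every pixel and so does not include the stream line reuse or error-controlled integrators that the abstract describes.

```python
import math


def lic(noise, vx, vy, half_steps=5, step=0.5):
    """Naive line integral convolution with a box filter.

    noise, vx and vy are equally sized 2-D lists: the input texture and
    the x and y components of the vector field, one value per cell.
    """
    height, width = len(noise), len(noise[0])
    out = [[0.0] * width for _ in range(height)]
    for py in range(height):
        for px in range(width):
            total, count = noise[py][px], 1
            # Trace the stream line forwards, then backwards.
            for direction in (1.0, -1.0):
                x, y = px + 0.5, py + 0.5
                for _ in range(half_steps):
                    u, v = vx[int(y)][int(x)], vy[int(y)][int(x)]
                    norm = math.hypot(u, v)
                    if norm == 0.0:
                        break  # critical point: the stream line ends here
                    x += direction * step * u / norm
                    y += direction * step * v / norm
                    if not (0 <= x < width and 0 <= y < height):
                        break  # left the grid
                    total += noise[int(y)][int(x)]
                    count += 1
            out[py][px] = total / count  # box filter: plain average
    return out
```

Texture values are smeared along the flow direction and left uncorrelated across it, which is what makes the flow lines visible in the output.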

Hans Køhling Pedersen

pages 291-300

This work presents a new general approach for applying textures onto implicit surfaces. Its main contributions are an improved set of interactive tools for subdividing implicit surfaces into convenient patches, an efficient and reliable algorithm for deriving parameterizations for these patches, and a new set of useful texturing operations. These results provide a unified representation scheme for a variety of texturing techniques that were previously incompatible, allowing more efficient manipulation, storage, and rendering of textured objects and a fuller use of current texture mapping hardware.

An implicit surface has been decorated by dragging, rotating, and scaling a single patch across it.

Xiaoyuan Tu and Demetri Terzopoulos

pages 43-50

This paper proposes a framework for animation that can achieve the intricacy of motion evident in certain natural ecosystems with minimal input from the animator. The realistic appearance, movement, and behavior of individual animals, as well as the behavior evident in groups of animals fall within the scope of this framework. Our approach to emulating this level of natural complexity is to model each animal holistically as an autonomous agent situated in its physical world. To demonstrate the approach, we develop a physics-based, virtual marine world. The world is inhabited by artificial fishes that can swim hydrodynamically in simulated water through the motor control of internal muscles that motivate fins. Their repertoire of behaviors relies on their perception of the dynamic environment. As in nature, the detailed motions of artificial fishes in their virtual habitat are not entirely predictable because they are not scripted.

Computer generated fish showing mating behavior.

Tom Reed and Brian Wyvill

pages 359-364

A method for rendering lightning using conventional raytracing techniques is discussed. The approach taken is directed at producing aesthetic images for animation, rather than providing a realistic physically based model for rendering. A particle system is used to generate the path of the lightning channel, and subsequently animate the lightning. A technique, using implicit surfaces, is used for illuminating objects struck by lightning.

Lightning over a plane of water.

Deborah R. Fowler, Przemyslaw Prusinkiewicz, and Johannes Battjes

pages 361-368

Plant organs are often arranged in spiral patterns. This effect is termed spiral phyllotaxis. Well known examples include the layout of seeds in a sunflower head and the arrangement of scales on a pineapple. This paper presents a method for modeling spiral phyllotaxis based on detecting and eliminating collisions between the organs while optimizing their packing. In contrast to geometric models previously used for computer graphics purposes, the new method arranges organs of varying sizes on arbitrary surfaces of revolution. Consequently, it can be applied to synthesize a wide range of natural plant structures.

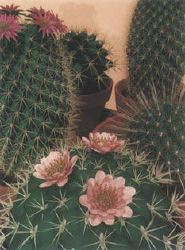

Table of cacti, including realistic models of Mammillaria spinosissima.
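
For contrast with the collision-based method described in the abstract, the sketch below shows the kind of purely geometric planar model it refers to. This is Vogel's classic formula, not the paper's algorithm: organ n is placed at an angle of n times the golden angle (about 137.5 degrees) and at a radius proportional to the square root of n. The function name `vogel_points` and the `scale` parameter are hypothetical.

```python
import math

# Golden angle in radians, roughly 137.5 degrees.
GOLDEN_ANGLE = math.pi * (3.0 - math.sqrt(5.0))

def vogel_points(count, scale=1.0):
    """Return (x, y) positions for `count` organs on a flat disc
    using Vogel's formula (a simple geometric phyllotaxis model)."""
    points = []
    for n in range(count):
        radius = scale * math.sqrt(n)   # keeps the packing density even
        angle = n * GOLDEN_ANGLE        # constant divergence angle
        points.append((radius * math.cos(angle), radius * math.sin(angle)))
    return points

seeds = vogel_points(200)
```

This model assumes equally sized organs on a plane, which is the limitation the abstract's method is designed to remove by handling organs of varying sizes on surfaces of revolution.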

Deborah R. Fowler, Hans Meinhardt, and Przemyslaw Prusinkiewicz

pages 379-387

This paper presents a method for modeling seashells, suitable for image synthesis purposes. It combines a geometric description of shell shapes with an activator-inhibitor model of pigmentation patterns on shell surfaces. The technique is illustrated using models of selected shells found in nature.

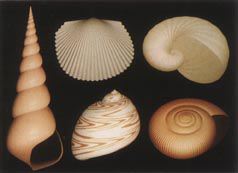

Variation of shell shapes resulting from different parameters of the helico-spiral.

John C. Hart and Thomas A. DeFanti

pages 91-100

Object instancing is the efficient method of representing a hierarchical object with a directed graph instead of a tree. If this graph contains a cycle then the object it represents is a linear fractal. Linear fractals are difficult to render for three specific reasons: (1) ray-fractal intersection is not trivial, (2) surface normals are undefined and (3) the object aliases at all sampling resolutions. Ray-fractal intersections are efficiently approximated to sub-pixel accuracy using procedural bounding volumes and a careful determination of the size of a pixel, giving the perception that the surface is infinitely detailed. Furthermore, a surface normal for these non-differentiable surfaces is defined and analyzed. Finally, the concept of antialiasing "covers" is adapted and used to solve the problem of sampling fractal surfaces. An initial bounding volume estimation method is also described, allowing a linear fractal to be rendered given only its iterated function system. A parallel implementation of these methods is described and applications of these results to the rendering of other fractal models are given.

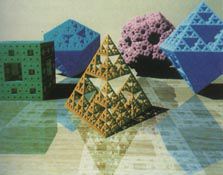

The five non-Platonic non-solids.

Andrew Witkin and Michael Kass

pages 299-308

We present a method for texture synthesis based on the simulation of a process of local nonlinear interaction, called reaction-diffusion, which has been proposed as a model of biological pattern formation. We extend traditional reaction-diffusion systems by allowing anisotropic and spatially non-uniform diffusion, as well as multiple competing directions of diffusion. We adapt reaction-diffusion systems to the needs of computer graphics by presenting a method to synthesize patterns which compensate for the effects of non-uniform surface parameterization. Finally, we develop efficient algorithms for simulating reaction-diffusion systems and display a collection of resulting textures using standard texture- and displacement-mapping techniques.

Texture Buttons: (a) giraffe, (b) coral, (c) scalloped.
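
To make the notion of spatially non-uniform diffusion concrete, here is a minimal sketch of one explicit Euler step of a two-chemical reaction-diffusion system on a 1D ring, where each edge between neighbouring cells has its own diffusion rate. The reaction term is the well-known Gray-Scott model, chosen here purely for illustration; the abstract does not say which reaction functions the authors use, and anisotropic diffusion (which needs at least two dimensions) is not shown. All names and default parameter values are assumptions.

```python
def diffuse(values, rates):
    """Diffusion term on a ring. rates[i] is the diffusion rate on the
    edge between cell i and cell i + 1, so rates may vary in space."""
    n = len(values)
    out = []
    for i in range(n):
        right = rates[i] * (values[(i + 1) % n] - values[i])
        left = rates[i - 1] * (values[i - 1] - values[i])
        out.append(right + left)
    return out

def gray_scott_step(u, v, rates_u, rates_v, feed=0.04, kill=0.06, dt=0.5):
    """One explicit Euler step of Gray-Scott reaction-diffusion
    (an illustrative reaction, not the one from the paper)."""
    du, dv = diffuse(u, rates_u), diffuse(v, rates_v)
    new_u, new_v = [], []
    for i in range(len(u)):
        uvv = u[i] * v[i] * v[i]  # nonlinear local interaction
        new_u.append(u[i] + dt * (du[i] - uvv + feed * (1.0 - u[i])))
        new_v.append(v[i] + dt * (dv[i] + uvv - (feed + kill) * v[i]))
    return new_u, new_v
```

Because the diffusion term is written as a difference of fluxes across edges, the total amount of each chemical is conserved by diffusion alone, even when the rates differ from edge to edge.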

Karl Sims

pages 319-328

This paper describes how evolutionary techniques of variation and selection can be used to create complex simulated structures, textures, and motions for use in computer graphics and animation. Interactive selection, based on visual perception of procedurally generated results, allows the user to direct simulated evolutions in preferred directions. Several examples using these methods have been implemented and are described. 3D plant structures are grown using fixed sets of genetic parameters. Images, solid textures, and animations are created using mutating symbolic lisp expressions. Genotypes consisting of symbolic expressions are presented as an attempt to surpass the limitations of fixed-length genotypes with predefined expression rules. It is proposed that artificial evolution has potential as a powerful tool for achieving flexible complexity with a minimum of user input and knowledge of details.

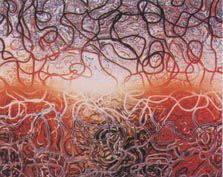

Now extinct mutation.

Ping-Kang Hsiung, Robert H. Thibadeau, and Robert H.P. Dunn

pages 10-18

What would the world look like if you were traveling at 99 percent of the speed of light? The physical principles and mathematical methods needed to answer this question have been understood for the better part of a century, ever since Einstein formulated the special theory of relativity in 1905. Only recently, however, has it become possible to create realistic images of a world seen at relativistic speeds. Some of these images reveal effects that defy intuition and that would have been very difficult to predict without the techniques of computer visualization. We have been investigating the appearance of the relativistic world with the aid of an algorithm we call REST-frame. ("REST" is an acronym for "relativistic effects in spacetime.") The algorithm relies on the long-established technique of ray-tracing to simulate a scene, but there is one crucial addition to the ray-tracing procedure: We take into account the finite speed of light and keep track of time as we trace a ray's path through space. From this one change the diverse effects of special relativity follow automatically.

Lattice moving toward the observer at .99c.
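
The effect of the finite speed of light can be illustrated without a full ray tracer. The sketch below is not the REST-frame algorithm; it only computes, for a point moving at constant velocity, how long ago the light now reaching an observer at the origin was emitted, and therefore where the point appears to be. With q the point's position at observation time and v its velocity, the delay tau satisfies |q - v tau| = c tau, which is a quadratic in tau. The function names and the choice of units with c = 1 are assumptions.

```python
import math

def lorentz_factor(beta):
    """Lorentz factor for a speed given as a fraction beta of c."""
    return 1.0 / math.sqrt(1.0 - beta * beta)

def light_delay(q, v, c=1.0):
    """Light-travel time tau for an observer at the origin.
    q: position of the point at observation time, v: its constant
    velocity (speed below c). Solves |q - v*tau| = c*tau for tau >= 0."""
    qv = sum(a * b for a, b in zip(q, v))
    qq = sum(a * a for a in q)
    vv = sum(b * b for b in v)
    k = c * c - vv
    return (-qv + math.sqrt(qv * qv + k * qq)) / k

def apparent_position(q, v, c=1.0):
    """Where the point was when it emitted the light seen now."""
    tau = light_delay(q, v, c)
    return tuple(a - b * tau for a, b in zip(q, v))

# A point one unit away, approaching at half the speed of light,
# is seen at the position it occupied two time units earlier.
seen = apparent_position((1.0, 0.0, 0.0), (-0.5, 0.0, 0.0))
```

At 0.99c the Lorentz factor is roughly 7.09, which gives a sense of how strongly lengths and times are distorted in images such as the lattice above.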

Leon Shirman and Yakov Kamen

Jan/Feb 1997

pages 60-66

Parametrization is an important yet complex step in texture mapping. The parametrization procedure associates the texture with the object and effectively controls texture placement on top of the object. It is also important in creating morphing and warping effects. Mathematically, parametrization is an ill-defined problem, since there are many possible ways to map a 2D image onto a surface of a 3D object, which may not be topologically equivalent to the rectangular texture space. Parametrization also remains the most unformalized procedure in texture mapping, although several promising ideas in this area have recently been published. We propose associating a 2D parameter space with a geometric object, thus simplifying and formalizing the parametrization process. This parameter space can be viewed as a natural generalization of the parameter space of parametric spline surfaces applied to general graphics primitives. The parametrization process therefore splits into two steps: geometric parametrization -- binding a 2D parameter space to the object; and tau-mapping -- mapping from the parameter space to the texture space. As a result, binding -- the more complex procedure -- becomes independent of a texture map and needs to be performed only once per object. The second step of parametrization -- tau-mapping -- is mathematically well-defined.

Textured image produced with tau-mapping constructed using spline based interpolation

Przemyslaw Prusinkiewicz and Aristid Lindenmayer

Springer 1996

pages 1-30

Lindenmayer systems -- or L-systems for short -- were conceived as a mathematical theory of plant development. Originally, they did not include enough detail to allow for comprehensive modeling of higher plants. The emphasis was on plant topology, that is, the neighborhood relations between cells or larger plant modules. Their geometric aspects were beyond the scope of the theory. Subsequently, several geometric interpretations of L-systems were proposed with a view to turning them into a versatile tool for plant modeling. Throughout this book, an interpretation based on turtle geometry is used. Basic notions related to L-system theory and their turtle interpretation are presented below.

Flower field generated with stochastic L-systems
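
The two notions named in the excerpt, parallel rewriting and turtle interpretation, can be sketched compactly. The code below is a minimal illustration for deterministic, context-free L-systems only. It assumes the common convention that F moves the turtle forward while drawing, + turns left, and - turns right by a fixed angle; the function names `rewrite` and `turtle_path` are hypothetical. Stochastic L-systems, as in the flower field above, would additionally choose among several productions at random.

```python
import math

def rewrite(axiom, rules, generations):
    """Apply the productions to every symbol in parallel, repeatedly.
    Symbols without a production are copied unchanged."""
    word = axiom
    for _ in range(generations):
        word = "".join(rules.get(symbol, symbol) for symbol in word)
    return word

def turtle_path(word, angle_deg, step=1.0):
    """Interpret a word as turtle commands and return the visited points."""
    x, y, heading = 0.0, 0.0, 0.0
    path = [(x, y)]
    for symbol in word:
        if symbol == "F":      # move forward, drawing a segment
            x += step * math.cos(heading)
            y += step * math.sin(heading)
            path.append((x, y))
        elif symbol == "+":    # turn left
            heading += math.radians(angle_deg)
        elif symbol == "-":    # turn right
            heading -= math.radians(angle_deg)
    return path

# Lindenmayer's original two-symbol example: A -> AB, B -> A.
algae = rewrite("A", {"A": "AB", "B": "A"}, 4)

# One generation of the Koch curve, drawn with 60-degree turns.
koch = turtle_path(rewrite("F", {"F": "F+F--F+F"}, 1), 60.0)
```

Keeping the rewriting step separate from the geometric interpretation mirrors the split described above: the word carries the topology, and the turtle supplies the geometry.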